Hosting a WordPress site is no different from hosting any other PHP- and MySQL-based application. A traditional LEMP stack (Linux, Nginx, MySQL, PHP) will get you most of the way, which is why services like Forge can host multiple applications, from Laravel to WordPress, on a single server.

While a traditional LEMP stack will work for hosting WordPress, it won't perform optimally, and it will struggle to handle any significant amount of traffic. With a few special considerations, though, WordPress can be both snappy and scalable. I’ve spent the last year building our new app, SpinupWP, which spins up servers optimized for WordPress and incorporates many of these considerations. In this article, however, I'm going to discuss two simple caching mechanisms you can implement to keep WordPress performant on a LEMP stack, wherever your servers are hosted. Let's start with object caching.

Object Caching

As with most database-driven content management systems, WordPress relies heavily on the database. A clean install of WordPress 5.0 with the Twenty Nineteen theme activated will execute a total of 19 SQL queries on the homepage alone. That's before you throw third-party plugins into the mix. It's not uncommon for over 100 SQL queries to be executed on every page load, which can be a huge strain on the server.

To combat this, WordPress introduced an internal object cache that stores data in PHP memory (including the results of database queries). However, the object cache is non-persistent by default, meaning its contents are discarded at the end of each request and rebuilt on the next page load, which is extremely inefficient. Luckily, WordPress can use an external, persistent in-memory datastore such as Redis for its object cache. Because the cached data survives between requests, this can dramatically reduce the number of database queries on each page load.
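To see why persistence matters, here is a toy Python model. It is not WordPress code: the cache keys and the FakeDatabase class are hypothetical stand-ins. Each page load looks up three keys through a read-through cache. A fresh dictionary per page load plays the part of WordPress's default in-PHP cache, while a single shared dictionary plays the part Redis would play.

```python
"""Toy model of per-request vs persistent object caching.

Illustrative only: this is not WordPress's API. The keys and the
FakeDatabase class are hypothetical stand-ins.
"""


class FakeDatabase:
    """Counts how many 'SQL queries' are executed."""

    def __init__(self) -> None:
        self.queries = 0

    def query(self, key: str) -> str:
        self.queries += 1
        return f"row for {key}"


def handle_page_load(db: FakeDatabase, cache: dict, keys: list) -> None:
    """Read-through cache: only query the database on a cache miss."""
    for key in keys:
        if key not in cache:
            cache[key] = db.query(key)


PAGE_KEYS = ["options", "post:1", "user:1"]  # hypothetical cache keys
PAGE_LOADS = 3

# Non-persistent: a new, empty cache for every page load.
db = FakeDatabase()
for _ in range(PAGE_LOADS):
    handle_page_load(db, {}, PAGE_KEYS)
non_persistent_queries = db.queries

# Persistent: one cache shared across page loads (the role Redis plays).
db = FakeDatabase()
shared_cache: dict = {}
for _ in range(PAGE_LOADS):
    handle_page_load(db, shared_cache, PAGE_KEYS)
persistent_queries = db.queries

print(non_persistent_queries, persistent_queries)  # 9 3
```

With the non-persistent cache every page load repeats all three queries. With the shared store only the first page load reaches the database.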

On Ubuntu, Redis can be installed like so:

```bash
sudo apt-get update
sudo apt-get install -y redis-server
```

Once Redis has been installed, you'll need to install the Redis Object Cache WordPress plugin and enable its object cache from the plugin's settings screen. This instructs WordPress to store its object cache data in Redis and persist the data across requests.

While this is a huge improvement (on the clean install above, down from 19 queries to 3), it doesn't completely remove the reliance on the database, which is often the biggest bottleneck in WordPress. The SQL queries that build the post index are still executed on every page load, because their results are not cached. This leads us on to full page caching.

Page Caching

A full page cache is hands down the most important thing you can implement to ensure WordPress doesn't fall over under load. It'll also improve response times for individual page requests. While there are a number of WordPress caching plugins available, most won't perform as well as a server-side solution, because the request is still handled by PHP. As we're already using LEMP, we can use Nginx FastCGI caching, which stores a static HTML version of each page. Subsequent visits are served that static HTML without ever hitting PHP or MySQL.
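Conceptually, a full page cache is a lookup table from request to rendered HTML, with an expiry time. The Python sketch below models only that idea. render_page() is a hypothetical stand-in for PHP and MySQL. The 60-minute lifetime mirrors the fastcgi_cache_valid 60m value used in the Nginx config. The status strings borrow names from Nginx's $upstream_cache_status. It is a simplification: Nginx also stores response headers and manages its cache files on disk, and this model ignores both.

```python
"""Toy full-page cache: render a page once, then serve the stored HTML until it expires.

Illustrative only: render_page() is a hypothetical stand-in for PHP + MySQL,
and the status strings borrow Nginx's $upstream_cache_status names.
"""

CACHE_TTL_SECONDS = 60 * 60  # mirrors fastcgi_cache_valid 60m

render_calls = 0


def render_page(uri: str) -> str:
    """The expensive path: in production, PHP and MySQL build the page."""
    global render_calls
    render_calls += 1
    return f"<html><body>{uri}</body></html>"


class PageCache:
    def __init__(self, ttl_seconds: float) -> None:
        self.ttl_seconds = ttl_seconds
        self.entries: dict = {}  # cache key -> (html, expires_at)

    def get(self, key: str, now: float) -> tuple:
        """Return (html, status), rendering only on a miss or an expired entry."""
        entry = self.entries.get(key)
        if entry is not None and now < entry[1]:
            return entry[0], "HIT"
        status = "MISS" if entry is None else "EXPIRED"
        html = render_page(key)
        self.entries[key] = (html, now + self.ttl_seconds)
        return html, status


cache = PageCache(CACHE_TTL_SECONDS)
# Three visits within the hour, then one just as the entry expires.
statuses = [cache.get("/", now=t)[1] for t in (0, 10, 3599, 3600)]
print(statuses, render_calls)  # ['MISS', 'HIT', 'HIT', 'EXPIRED'] 2
```

Four visits cost only two renders. Every visit in between is answered from the stored HTML.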

To enable Nginx FastCGI caching, you'll need to add a few directives to your existing configs. The following should be added to the top of each virtual host file, before the server block:

```nginx
fastcgi_cache_path /path/to/cache/directory levels=1:2 keys_zone=acmepublishing.com:100m inactive=60m;
```

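This creates the cache zone and sets the directory where cached responses are stored. In brief, levels=1:2 creates a two-level directory hierarchy under that path. keys_zone names the zone (acmepublishing.com) and gives it 100 MB of shared memory for cache keys and metadata. inactive=60m removes entries that haven't been accessed for 60 minutes, regardless of their freshness. See the Nginx documentation for fastcgi_cache_path for the full list of arguments. Next, in the location block that passes requests to PHP, tell Nginx to check whether the current request is already cached before sending it off to PHP: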

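```nginx
location ~ \.php$ {
    try_files $uri =404;
    include fastcgi.conf;
    fastcgi_pass unix:/var/run/php/php7.3-fpm.sock;

    # Don't serve from, or save to, the cache when $skip_cache is 1
    fastcgi_cache_bypass $skip_cache;
    fastcgi_no_cache $skip_cache;

    # Use the zone defined by fastcgi_cache_path; cache 200, 301 and 302 responses for 60 minutes
    fastcgi_cache acmepublishing.com;
    fastcgi_cache_valid 60m;
}
```

The $skip_cache variable lets you bypass the cache. fastcgi_cache_bypass tells Nginx not to serve a cached response, and fastcgi_no_cache tells it not to save the response to the cache. A typical strategy is to cache only GET requests from visitors who aren't logged in. It's also common to skip requests that contain a query string. The following rules, placed in the server block, set $skip_cache accordingly: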

```nginx
# Don't skip cache by default
set $skip_cache 0;

# POST requests should always go to PHP
if ($request_method = POST) {
    set $skip_cache 1;
}

# URLs containing query strings should always go to PHP
if ($query_string != "") {
    set $skip_cache 1;
}

# Don't cache URIs containing the following segments
if ($request_uri ~* "/wp-admin/|/wp-json/|/xmlrpc.php|wp-.*.php|/feed/|index.php|sitemap(_index)?.xml") {
    set $skip_cache 1;
}

# Don't use the cache for logged-in users or recent commenters
if ($http_cookie ~* "comment_author|wordpress_[a-f0-9]+|wp-postpass|wordpress_no_cache|wordpress_logged_in|edd_items_in_cart|woocommerce_items_in_cart") {
    set $skip_cache 1;
}
```

The final piece of the caching puzzle is to ensure Nginx can cache responses that carry Cache-Control, Expires or Set-Cookie headers. By default, Nginx won't cache a response that includes a Set-Cookie header, and it uses Cache-Control and Expires to decide whether and for how long to cache. If WordPress sends these headers, pages might not be cached, so we tell Nginx to ignore them. We also need to set the cache key and tell Nginx when it may serve a stale (expired) cached copy, for example if PHP returns an error or while a fresh copy is being generated:

```nginx
fastcgi_cache_key "$scheme$request_method$host$request_uri";
fastcgi_cache_use_stale error timeout updating invalid_header http_500;
fastcgi_ignore_headers Cache-Control Expires Set-Cookie;
```

A basic Nginx config might look something like this:

```nginx
fastcgi_cache_path /sites/example.com/cache levels=1:2 keys_zone=example.com:100m inactive=60m;

server {
    listen 443 ssl http2;
    listen [::]:443 ssl http2;

    server_name example.com;
    root /sites/example.com/public;

    ssl_certificate /etc/letsencrypt/live/example.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/example.com/privkey.pem;

    index index.php;

    fastcgi_cache_key "$scheme$request_method$host$request_uri";
    fastcgi_cache_use_stale error timeout updating invalid_header http_500;
    fastcgi_ignore_headers Cache-Control Expires Set-Cookie;

    # Expose the cache status (HIT, MISS, BYPASS, ...) as a response header
    add_header Fastcgi-Cache $upstream_cache_status;

    # Don't skip cache by default
    set $skip_cache 0;

    # POST requests should always go to PHP
    if ($request_method = POST) {
        set $skip_cache 1;
    }

    # URLs containing query strings should always go to PHP
    if ($query_string != "") {
        set $skip_cache 1;
    }

    # Don't cache URIs containing the following segments
    if ($request_uri ~* "/wp-admin/|/wp-json/|/xmlrpc.php|wp-.*.php|/feed/|index.php|sitemap(_index)?.xml") {
        set $skip_cache 1;
    }

    # Don't use the cache for logged-in users or recent commenters
    if ($http_cookie ~* "comment_author|wordpress_[a-f0-9]+|wp-postpass|wordpress_no_cache|wordpress_logged_in|edd_items_in_cart|woocommerce_items_in_cart") {
        set $skip_cache 1;
    }

    location / {
        try_files $uri $uri/ /index.php?$args;
    }

    location ~ \.php$ {
        try_files $uri =404;
        include fastcgi.conf;
        # Same PHP-FPM socket as the earlier example; adjust for your PHP version
        fastcgi_pass unix:/var/run/php/php7.3-fpm.sock;

        fastcgi_cache_bypass $skip_cache;
        fastcgi_no_cache $skip_cache;
        fastcgi_cache example.com;
        fastcgi_cache_valid 60m;
    }
}
```

Benchmarks

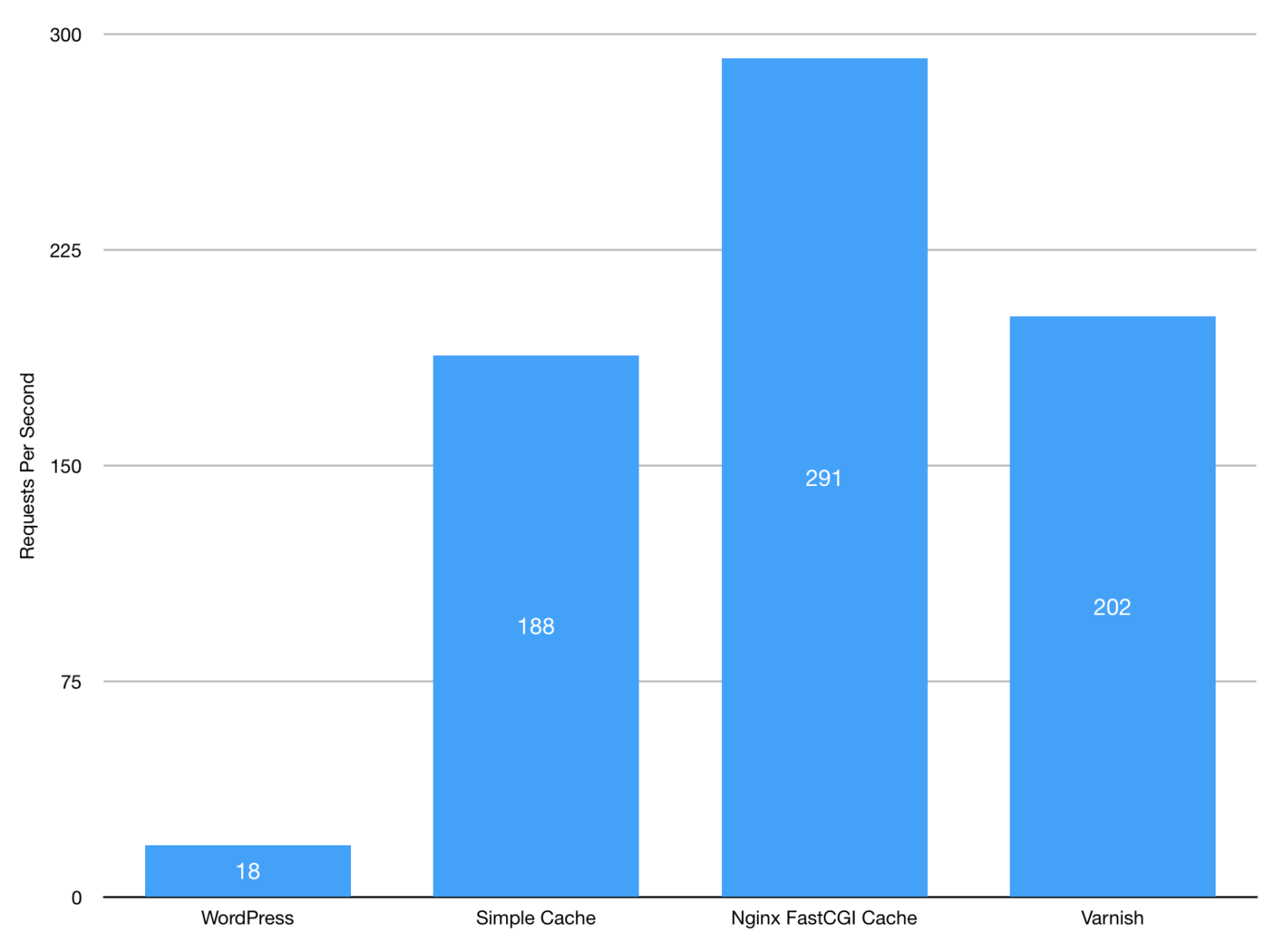

Let's compare a few common caching solutions to see how they measure up. First I'll test WordPress without any caching, then a WordPress cache plugin (Simple Cache), followed by Nginx FastCGI caching. I'll also include Varnish to demonstrate that you don't need additional server software to perform simple caching.

We'll use ApacheBench to send 10,000 requests with a concurrency of 100. In other words, ApacheBench keeps up to 100 requests in flight at any one time until all 10,000 have completed.
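Concurrency in ApacheBench is a cap on the number of requests in flight, not a fixed batch size. As soon as one response arrives, the next request goes out. The Python sketch below models that behaviour with asyncio. A semaphore of 100 enforces the cap, and asyncio.sleep(0) stands in for a real HTTP request. This is an assumption for illustration and says nothing about how ab itself is implemented. The sketch records the peak number of simultaneous requests.

```python
"""Model of `ab -n 10000 -c 100`: at most 100 requests in flight at any time.

Illustrative only: fake_request() stands in for a real HTTP request.
"""
import asyncio

TOTAL_REQUESTS = 10_000  # ab -n
CONCURRENCY = 100        # ab -c


async def run_benchmark(total: int, concurrency: int) -> tuple:
    limit = asyncio.Semaphore(concurrency)
    in_flight = 0
    peak = 0
    completed = 0

    async def fake_request() -> None:
        nonlocal in_flight, peak, completed
        async with limit:  # wait for a free slot before "sending"
            in_flight += 1
            peak = max(peak, in_flight)
            await asyncio.sleep(0)  # stand-in for network + server time
            in_flight -= 1
            completed += 1

    await asyncio.gather(*(fake_request() for _ in range(total)))
    return completed, peak


completed, peak = asyncio.run(run_benchmark(TOTAL_REQUESTS, CONCURRENCY))
print(completed, peak)  # 10000 100
```

In the real tests, ab plays the role of run_benchmark() against the live site: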

```bash
ab -n 10000 -c 100 https://siteunderload.com/
```

For the non-cached version, I'll use a concurrency of 20, because WordPress can't handle 100 concurrent requests without caching.

```bash
ab -n 10000 -c 20 https://siteunderload.com/
```

Requests Per Second

Source: SpinupWP

As expected, WordPress doesn’t perform well, because both PHP and MySQL are involved in handling every request. Simply taking the database server out of the equation by enabling a page cache plugin gives a 10x increase in requests per second. A server-side caching solution takes this even further, because PHP is bypassed as well.

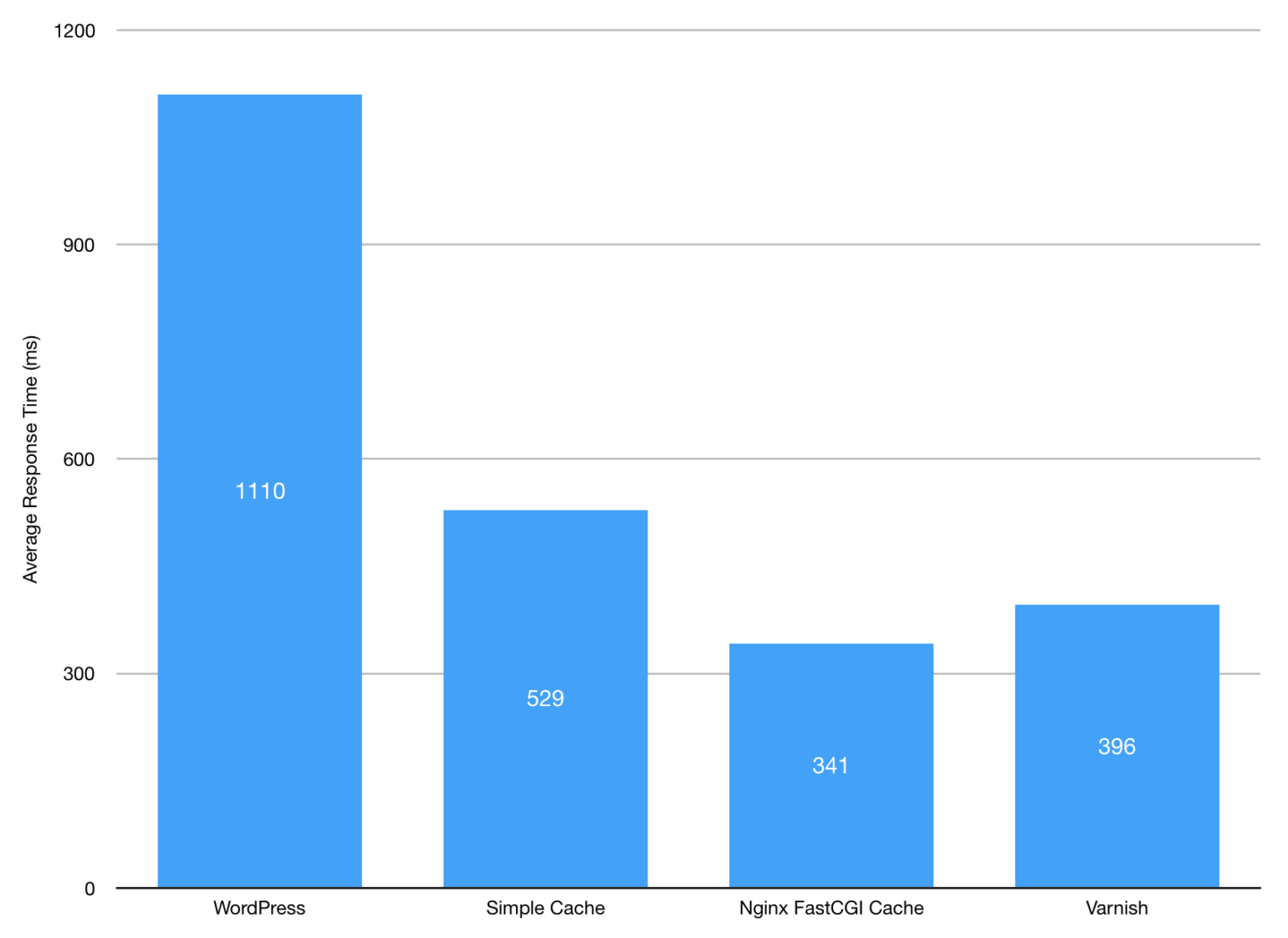

Average Response Time (ms)

Source: SpinupWP

WordPress once again doesn't perform well because of the number of moving parts required to handle each request (Nginx, PHP and MySQL). Generally, the fewer moving parts you have in a request lifecycle, the lower the average response time will be. Nginx FastCGI caching performs so well because it only has to serve a static file from disk, and that file will likely already be held in memory by the Linux page cache.
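The two charts are linked. When a fixed number of requests is always in flight, throughput and response time are tied together by Little's law (L = λW): requests per second is roughly the concurrency divided by the mean response time. The Python helper below applies that relationship. The 50 ms and 250 ms inputs are hypothetical and are not figures from these benchmarks.

```python
"""Relate throughput to response time at a fixed concurrency (Little's law: L = λW).

Illustrative only: the latencies below are hypothetical, not benchmark results.
"""


def requests_per_second(concurrency: int, mean_response_ms: float) -> float:
    """Approximate throughput when `concurrency` requests are always in flight."""
    if mean_response_ms <= 0:
        raise ValueError("mean_response_ms must be positive")
    return concurrency * 1000 / mean_response_ms


fast = requests_per_second(100, 50)   # hypothetical: page served from the cache
slow = requests_per_second(100, 250)  # hypothetical: page rendered by PHP + MySQL
print(fast, slow)  # 2000.0 400.0
```

At the same concurrency, cutting the mean response time by a factor of five raises requests per second by the same factor. That is why removing PHP and MySQL from the request path improves both charts at once.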

Conclusion

As you can see from the benchmarks, uncached WordPress doesn't perform well under load. However, with a few simple modifications to your LEMP stack, you can improve its performance dramatically. Looking to take things further? Check out A CDN Isn’t a Silver Bullet for Performance, or save yourself the hassle and spin up a server with SpinupWP.

About the Author

Ashley is a former SAC Technician who built servers for the Royal Air Force, and a PHP developer with a fondness for hosting, server performance and security. He’s the author of our popular Host WordPress Yourself article series and one of the developers behind SpinupWP, the modern server control panel for WordPress.